People are relying on AI for emotional support. This has global implications for trust and governance.

Some findings from our Global Dialogues on Human-AI relationships

AI is no longer just a tool; it is a new form of soft power.

AI moved from task tool to emotional infrastructure in less than a year. Our recent Global Dialogues, which surveyed around 1058 people across 70 countries, reveal a profound shift in human-AI relationships. We found that people are increasingly turning to AI for emotional support or help with personal issues, with 42.8% of our global sample using it in that manner once a week or more. This behavior suggests a new kind of soft power: whoever controls the systems people turn to for emotional support has significant influence over collective well-being and decision-making.

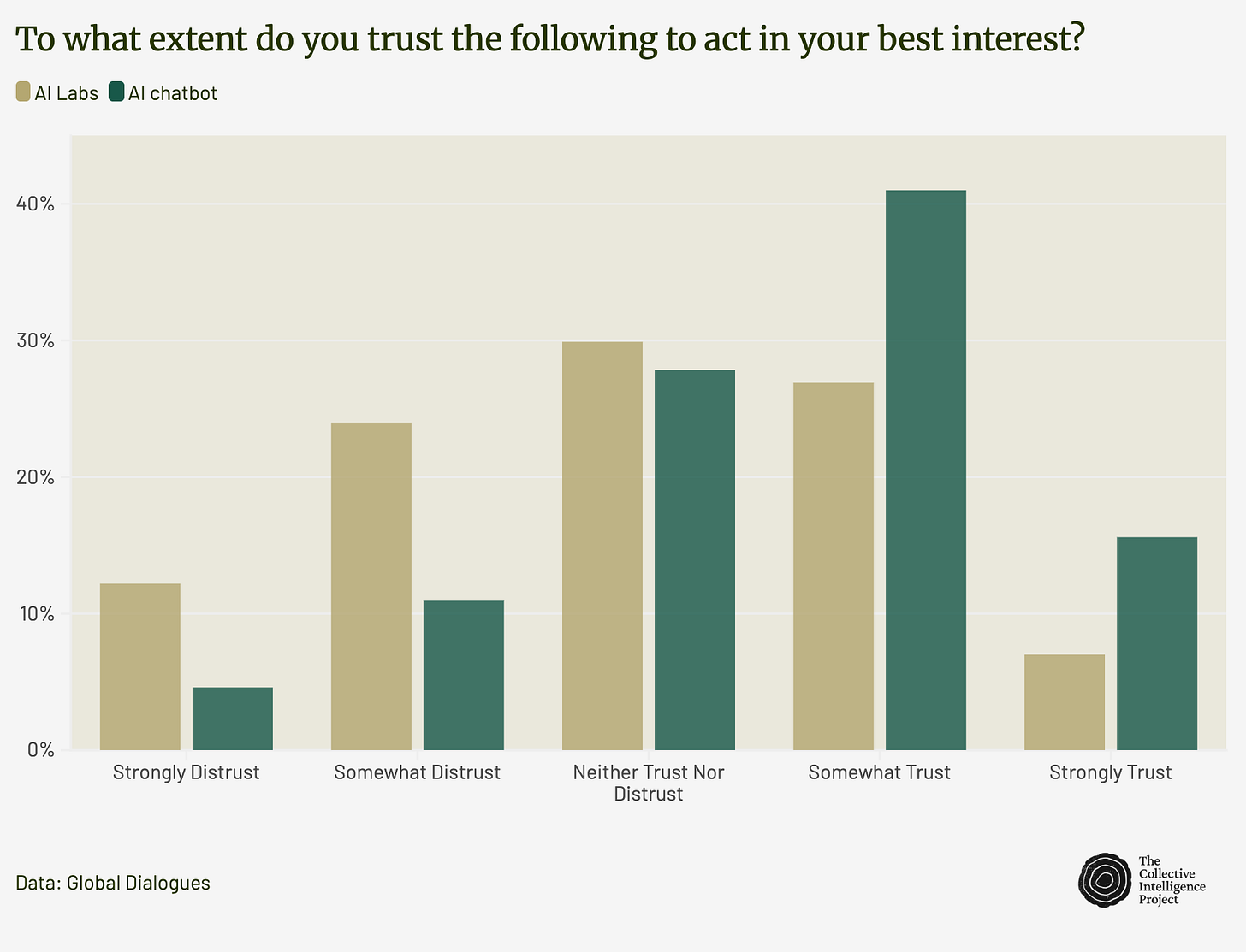

People now trust AI companions more than almost any institution, but not the companies building them.

38% trust AI to make better decisions on their behalf than government representatives.¹

50% trust their AI chatbot to act in their best interest.²

AI chatbots rank higher in trust than elected officials and major corporations.

While it’s too early to tell, this shift in institutional trust from human democratic systems to algorithmic ones may be one of the most significant institutional trust transfers in recent history. But the same people who trust AI systems deeply distrust AI companies. They rate AI companies lower than traditional corporations on trustworthiness. They worry about data misuse and corporate manipulation. The trust of the global public is in the product, not the producer. This split creates a massive vulnerability that democracies aren't prepared to handle.

Human-AI relationships are well underway.

15% of our global sample are using AI for emotional support on a daily basis.

Nearly 1 in 5 people consider it acceptable to form a romantic relationship with an AI.

More than 1 in 10 would personally consider it.

More than 1 in 5 people say that if AI emotional support made them feel better, they would likely come to rely on it regularly.

One in three believe their AI showed consciousness at some point.

This crosses the line from tool to companion. These intimate relationships create unprecedented influence over human sensemaking and decision-making.

We are witnessing tremendous algorithmic influence without democratic accountability.

When people trust AI more than institutions but distrust AI companies, power flows to systems without democratic oversight. Companies that build AI chatbots can shape behavior, influence decisions, and guide emotional processing, all while users actively distrust their intentions. As we have seen, these kinds of corporate decisions have already had a widespread impact on the emotional well-being of human users. This leaves society in a position of democratic vulnerability. Evidence has demonstrated that human-AI dialogue can change religious perspectives and distort beliefs as well as human perceptual, emotional, and social judgements.

Current governance approaches completely miss this vulnerability.

Regulators focus on preventing AI from lying or producing harmful content. They measure accuracy and safety in individual responses. The real power lies in relationship dynamics over time. Trusted companions shape users gradually through repeated interactions, not single harmful outputs. We have few, if any, frameworks for evaluating the systemic risks of relationship manipulation, emotional dependency, or collective influence. Governance built for "AI as a tool" cannot address "AI as trusted advisor." The regulatory focus on immediate harms misses the systemic risk of algorithmic influence operating through trusted relationships.

Collective intelligence can help.

The public already understands these power dynamics and can offer sophisticated distinctions about AI relationships that regulators can miss. We asked the global public about the role of AI in children's lives and in the lives of the elderly: 90% worry about children forming emotional dependencies with AI, while only 12.6% see net risks for elderly AI companionship.

For children, the public adopts a universally protective stance. We found near-universal agreement on risks: nearly nine in ten people worry about children becoming emotionally dependent on AI, and more than 80% fear the impact on human relationships. In this area, people overwhelmingly support institutional intervention, and over 70% want schools and parents to actively discourage emotional bonds with AI. With older adults, the public sees a tool to address the problem of loneliness and accepts a more nuanced approach. With children, the public sees a fundamental threat to development and calls for protection. However, the public also sees a clear use for AI with children: more than 80% of people agree AI companions are valuable educational tools. This is the most accepted use case we measured. The public sees a clear, structured utility here.

For older adults, people take a situational approach. We asked about using AI to help an older person experiencing loneliness. Only 12.6% said the risks generally outweigh the benefits. Nearly a third said it depends entirely on the individual. The public is open to the idea. They see AI as a potential tool for comfort, and they want to balance the risks with the benefits on a case-by-case basis.

These governance challenges require collective intelligence because perspectives vary dramatically across cultures.

Our data reveals significant disagreement about AI relationships. When we asked about AI's social impact on personal relationships, responses showed the highest divergence of any question, with agreement ranging from 13% in Central Asia to 88% in Central America. Similar splits emerged on questions about regulatory boundaries and trust foundations. This cultural variation proves that top-down, universal AI governance will fail. Different communities have fundamentally different values about technology, relationships, and appropriate boundaries. Only collective intelligence approaches can navigate this complexity.

Building collective intelligence infrastructure to protect against this democratic vulnerability.

The challenge of AI as a trusted companion demands a solution that recognizes the complexity of human relationships and the diversity of global cultures. This is a foundational principle behind our work. By gathering nuanced data through our Global Dialogues, we’re laying the groundwork for a more intelligent, democratic future. We are currently running Global Dialogues every two months to gather granular data on AI usage and attitudes from around the world. Without this crucial information, we are steering toward an uncertain horizon with no bearings.

Our new project, Weval, allows anyone to contribute to measuring what truly matters in human-AI relationships and beyond. We are building the tools for a new kind of governance, where public insight directly shapes the technology that is, in turn, reshaping society.

Learn more about Global Dialogues and explore our findings at globaldialogues.ai. We welcome collaboration with organizations interested in incorporating public perspectives into AI development and governance processes.

¹ These findings are combined across three Global Dialogues: GD3 (n=986, March 2025), GD4 (n=1058, May 2025), and GD6 (n=1032, Aug 2025).

² Ibid.