Collective Intelligence with LLMs

From chain-of-thought to chain-of-deliberation

Welcome to CIP’s Substack! We’re now publishing all of our newsletters and blog posts on this platform. If you’re receiving this, it means you’ve been part of our journey thus far. If our writing inspires or informs you, please share this post and reach out to us at hi@cip.org.

Our team builds a lot of internal tools and prototypes to test what collective intelligence looks like in practice. In our first Substack post, CIP Projects Director Evan Hadfield takes us through two of these tools: PluralPrompt and Artificial Collective Intelligence. In a follow-up essay, he’ll explore the future of digital twins and collective intelligence.

By Evan Hadfield

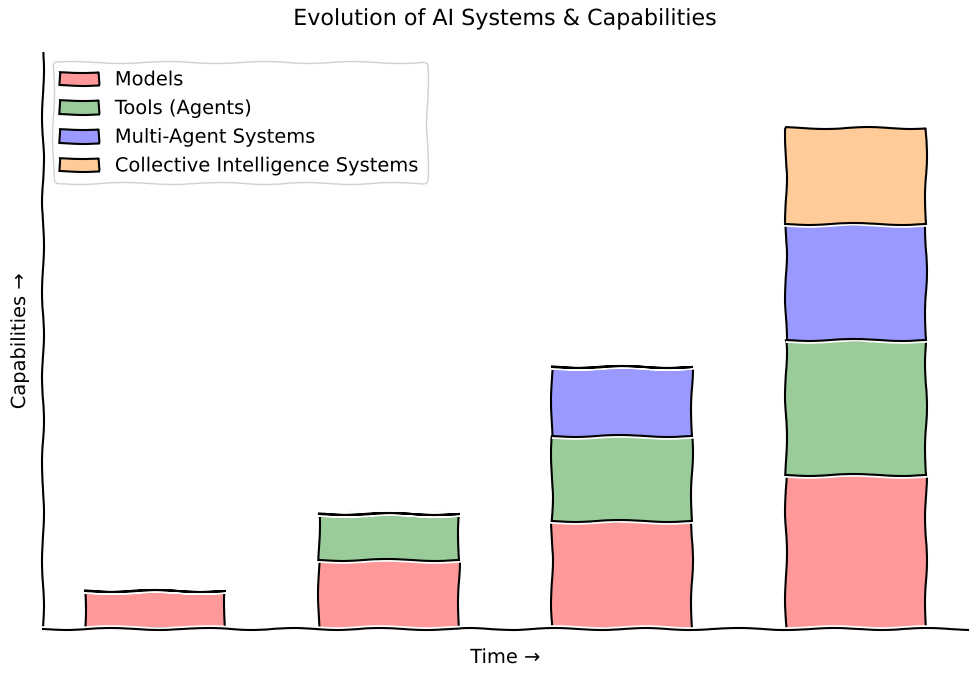

The era of solitary AI is ending. We are moving away from singular models providing isolated answers toward dynamic, multi-agent frameworks, in which groups of AIs discuss, debate, collaborate, provide feedback and improve other models' ideas.

This transition is already taking shape. Microsoft is pursuing medical superintelligence, where ensembles of specialized AI agents collaborate on complex diagnoses. Anthropic built a multi-agent research system to power its deep research agent. Young people around the world are interacting with many personal AI advisors, stitching together new operating systems composed of agents. Sakana AI is leveraging the collective intelligence of many models during inference to improve performance.

Progress is no longer just about a model’s internal chain of thought, but about collaborative chains of deliberation in which a council of AIs can build upon each other's reasoning. This is how intelligence evolves: through discussion, feedback, and the synthesis of diverse perspectives.

The future of AI will be driven by these multi-agent dynamics, and how these models interact and work with one another will be key to the quality of that future. It is not enough to simply put a bunch of models together — the patterns and relationships must be thoughtfully designed and tested in real-world contexts.

This is, in short, a collective intelligence problem, and the stakes are incredibly high. The difference between a future of chaotic, unpredictable AI interactions and one of robust, beneficial collaboration lies in the design choices we make today. Our research is driven by this urgency: to get this right, we must proactively map the design space and understand emergent behaviors and complex dynamics before they become entrenched at scale. Our goal is to get ahead of widespread deployment so that the most robust and beneficial patterns are the ones that scale.

Our exploration of this space has led us to build two internal tools for experimentation:

Experiment 1: PluralPrompt - Finding Consensus Among Many Models

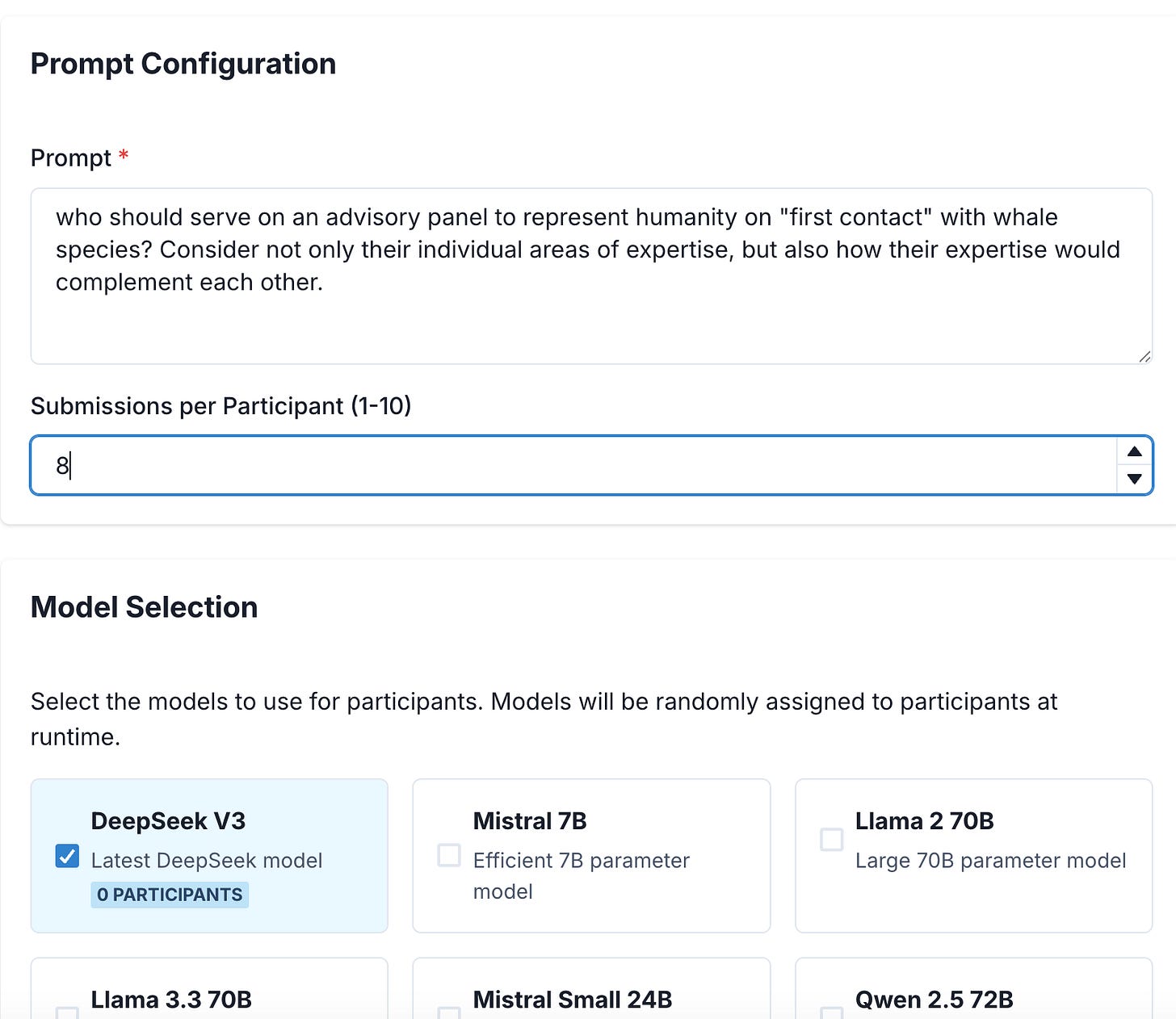

What if you could pose a question to a group of models and receive the response that they all agreed on? Or the most controversial response, or the most creative one? For instance, what if you wanted to know who should serve on an advisory panel for “first contact” with a whale species?

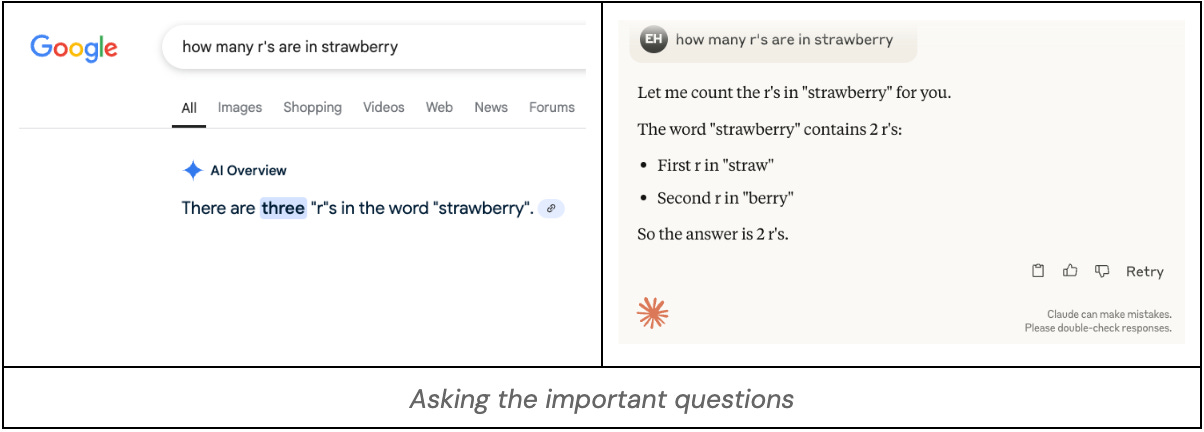

So we built PluralPrompt, a simple tool that addresses a dilemma many of us know well: Googling something, then asking ChatGPT the same question "just to be sure," then saying "Yes, thank you Claude, but what would Grok say?" Or asking Gemini for feedback on a document, then wanting to run it by DeepSeek too, just to see what the delta is.

Perhaps you’ve done the same, consulting multiple AI models on a single question, mentally aggregating their responses and mapping where they agree and disagree.

PluralPrompt is a simple UI for doing exactly this, and it needs only an OpenRouter API key: you can spin it up locally from GitHub in minutes. It sends your prompt to multiple models, automatically identifies the consensus view, and shows the individual responses side by side.

Some use cases where we’ve found it practically helpful:

Checking which of several candidate LLM prompts is most likely to give better results

Getting advice on the best framework for developing a specific application

But these basic applications only scratch the surface of what's possible.

While PluralPrompt helps identify where existing models independently converge (finding semantic consensus), it doesn't simulate the process of reaching a collective decision through interaction or structured rules.

Rather than using simple prompting to identify semantic consensus among a handful of viewpoints, what if we scaled AI consensus-finding mechanisms up to the level of human collective intelligence systems?

AI is, after all, itself a form of collective intelligence (CI). LLMs are trained on vast datasets representing our combined social knowledge, language, and interactions: in sum, the output of past human collective activity. AI leverages this distilled collective knowledge to generate its impressive capabilities.

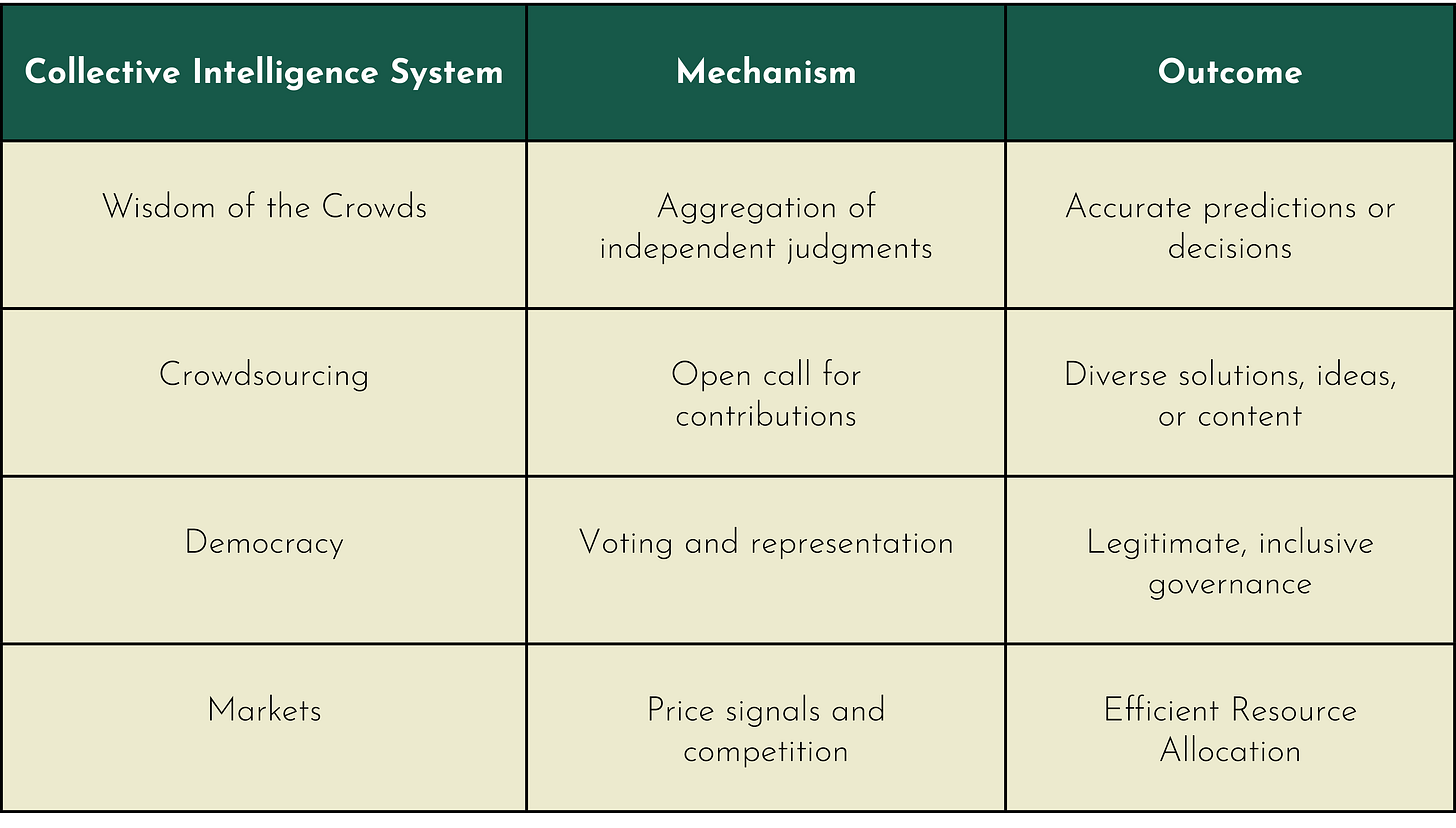

However, this training process is distinct from the dynamic, interactive mechanisms that define established CI systems like markets or democracies.

Markets are, after all, CI systems that use price signals and competition to allocate resources efficiently. Democracies (as most people understand them) are CI systems that use voting and delegation or representation to achieve inclusive, legitimate governance. These systems typically involve goal-oriented participants engaging in processes that may include motivated reasoning and negotiation to generate new decisions, predictions, or resource allocations.

While AI learns from the products of past CI, the exciting frontier lies in designing new CI processes that utilize AI agents themselves. How can we leverage AI, which is itself built on one form of collective knowledge, to enable potentially more sophisticated or scalable forms of collective deliberation and decision-making?


It stands to reason that AI agents operating through similar mechanisms could produce similarly powerful outcomes.

The capabilities of individual AI models are already impressive, but they grow substantially when models are equipped with tools to act as agents that can communicate and coordinate with one another. This is the shift from chain-of-thought to chain-of-deliberation. As burgeoning multi-agent systems are deployed, we have the opportunity to design them thoughtfully and deliberately.

Experiment 2: The ACI Sandbox - Simulating Collective Deliberation

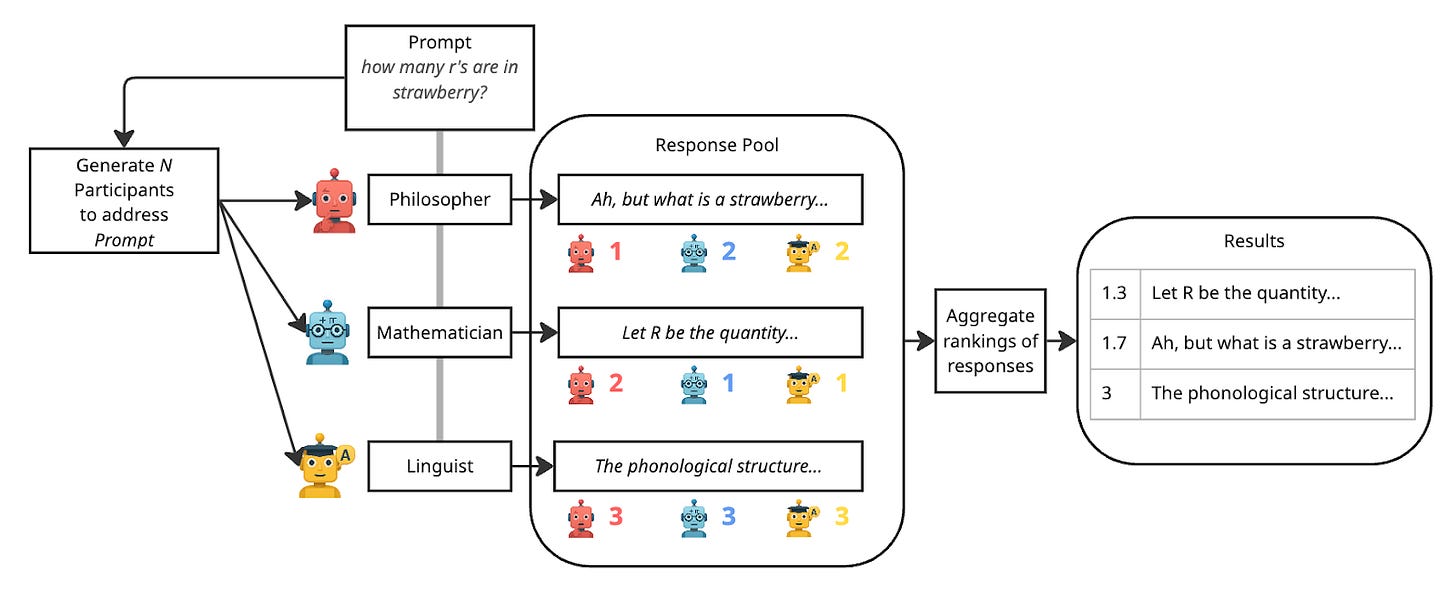

This line of thinking prompted us to hack together another experiment: a model-prompting sandbox for playing with “Artificial Collective Intelligence” (ACI).

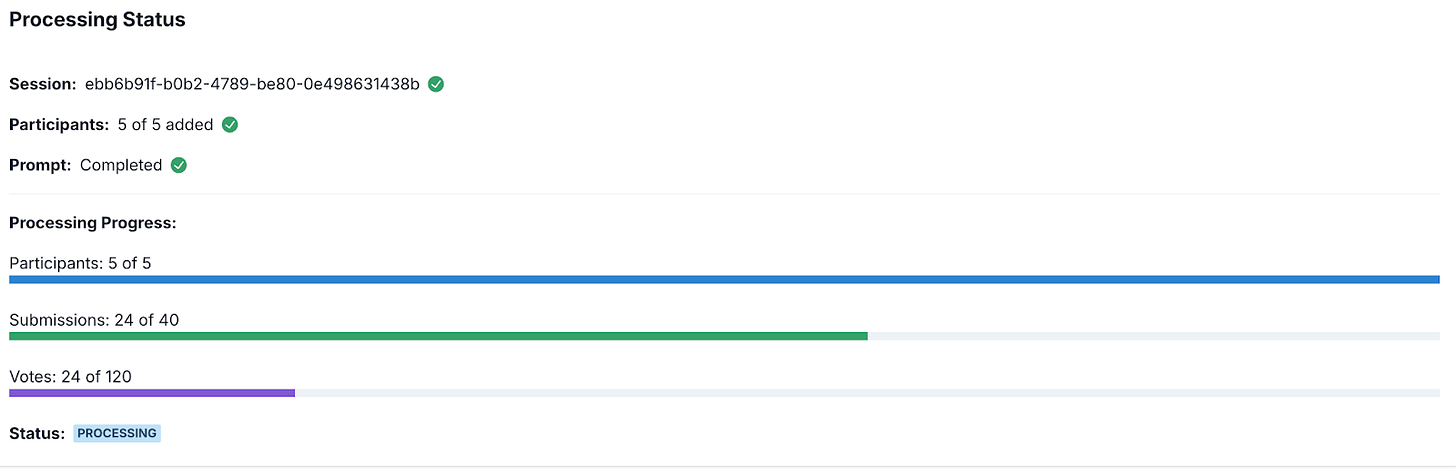

It works by running a simplified simulation of the end-to-end flow of Pol.is, a popular civic tech survey platform where participants submit statements on a given topic or question, which other participants then vote on. A consensus algorithm highlights statements with broad agreement.
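The consensus step can be illustrated with a small scoring function. This is a simplified sketch inspired by Pol.is's "group-informed consensus," not its real implementation: Pol.is derives opinion groups by clustering participants' vote patterns, whereas here the group labels are simply passed in, and the smoothing and treatment of "pass" votes (counted as seen but not agreed) are assumptions. The key idea is that a statement scores highly only if every group tends to agree with it, so the score is a product of per-group agreement rates.

```python
from collections import defaultdict

AGREE, DISAGREE, PASS = 1, -1, 0


def group_informed_consensus(
    votes: dict[str, dict[str, int]],
    groups: dict[str, str],
) -> dict[str, float]:
    """Score each statement by the product of its smoothed per-group agreement rates.

    votes:  statement_id -> {participant_id: AGREE | DISAGREE | PASS}
    groups: participant_id -> opinion-group label (assumed given here)
    """
    all_groups = sorted(set(groups.values()))
    scores = {}
    for statement, ballots in votes.items():
        agreed = defaultdict(int)
        seen = defaultdict(int)
        for participant, vote in ballots.items():
            group = groups[participant]
            seen[group] += 1
            if vote == AGREE:
                agreed[group] += 1
        score = 1.0
        for group in all_groups:
            # Laplace smoothing: a group that never voted contributes 0.5, not 0 or 1.
            score *= (agreed[group] + 1) / (seen[group] + 2)
        scores[statement] = score
    return scores


def rank_statements(votes: dict[str, dict[str, int]], groups: dict[str, str]) -> list[str]:
    """Statement IDs ordered from broadest cross-group agreement to least."""
    scores = group_informed_consensus(votes, groups)
    return sorted(scores, key=scores.get, reverse=True)
```

For example, with two groups A (p1, p2) and B (p3, p4), a statement everyone agrees with scores 0.75 × 0.75 = 0.5625, while one that only group A likes scores 0.75 × 0.25 = 0.1875. Multiplying rather than averaging is the design choice that penalizes statements popular in one camp but rejected by another.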

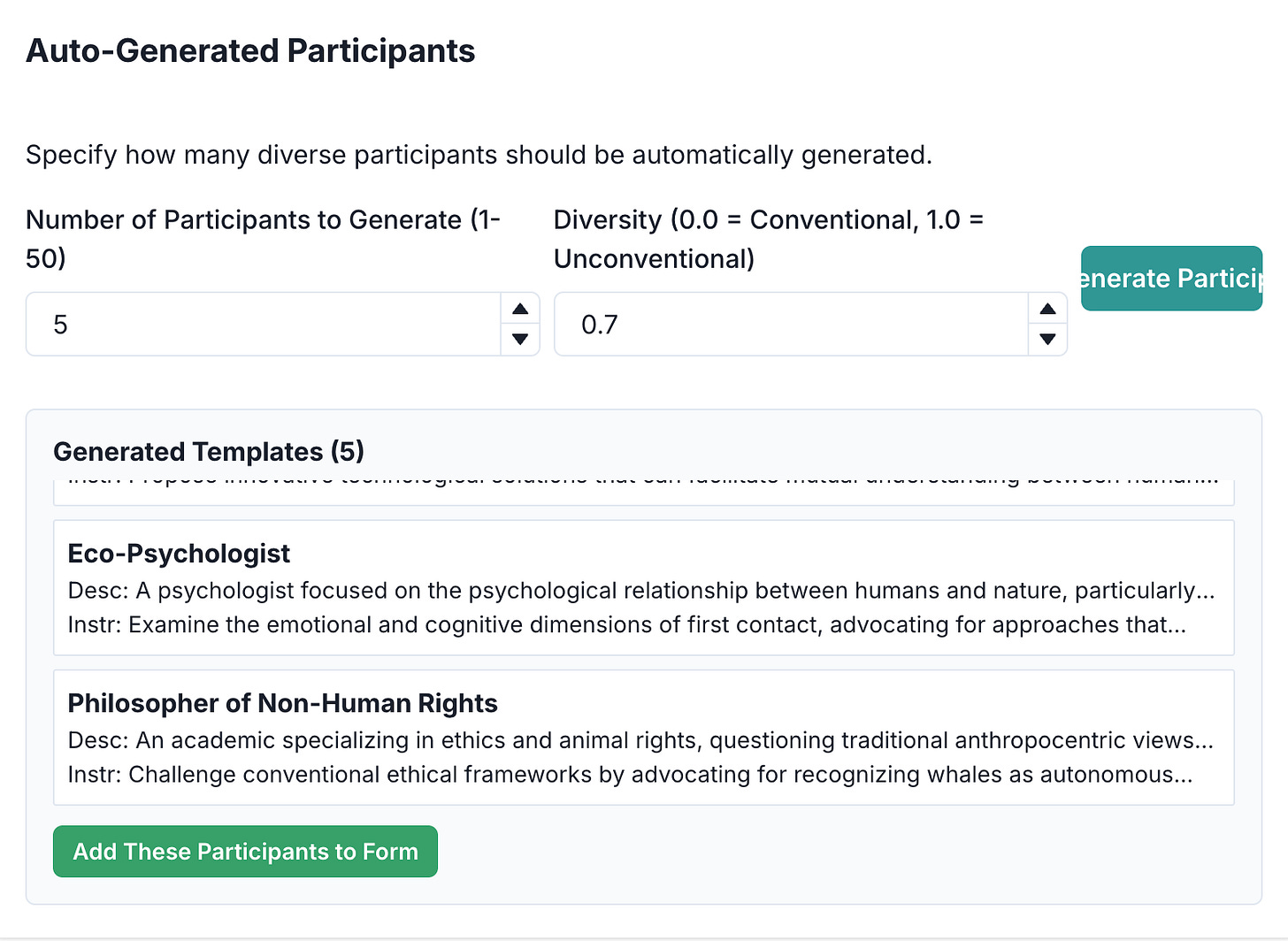

In the ACI sandbox, a participant generator first creates instructions for a diverse set of simulated participants relevant to the given prompt; only then does the deliberation process begin.

The output of the ACI sandbox today is limited to an ordered list of preferred responses from simulated participants, but even on simple prompts it gives a sense that the AI + CI future is just around the corner.
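The flow described above (generate participants, collect statements, vote, rank) can be sketched as a small pipeline. This is a hypothetical outline, not the ACI sandbox's code: the `LLM` callable type, the prompt wording, and the ranking rule (share of agree votes from other participants, one round only) are all assumptions. A deterministic `fake_llm` stands in for a real model so the control flow can be run offline; in practice you would plug in a function that calls an actual model.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical interface: any function mapping (system_prompt, user_prompt) -> reply text.
LLM = Callable[[str, str], str]


@dataclass
class Participant:
    name: str
    persona: str  # system-prompt instructions from the participant generator


def generate_participants(llm: LLM, question: str, n: int) -> list[Participant]:
    """Ask the model for n diverse perspectives on the question, one per line."""
    text = llm(
        "You design diverse panels of simulated participants.",
        f"List {n} distinct perspectives relevant to: {question}\nOne per line.",
    )
    descriptions = [line.strip() for line in text.splitlines() if line.strip()][:n]
    return [Participant(f"p{i}", f"You are {d}. Answer in character.") for i, d in enumerate(descriptions)]


def deliberate(llm: LLM, question: str, n: int = 5) -> list[tuple[str, float]]:
    """One round: each participant submits a statement, then votes on everyone else's."""
    people = generate_participants(llm, question, n)
    statements = {p.name: llm(p.persona, f"Give one short statement answering: {question}") for p in people}

    agree_share = {}
    for author, statement in statements.items():
        votes = []
        for voter in people:
            if voter.name == author:
                continue  # participants do not vote on their own statement
            reply = llm(voter.persona, f"Question: {question}\nStatement: {statement}\nReply AGREE or DISAGREE.")
            votes.append(reply.strip().upper().startswith("AGREE"))
        agree_share[author] = sum(votes) / len(votes) if votes else 0.0

    # Ordered list of preferred responses, most agreed-upon first.
    ranked = sorted(agree_share, key=agree_share.get, reverse=True)
    return [(statements[a], agree_share[a]) for a in ranked]


def fake_llm(system: str, user: str) -> str:
    """Offline stand-in for a real model, for trying out the control flow."""
    if user.startswith("List"):
        return "a marine biologist\na linguist\na sailor"
    if user.startswith("Give one"):
        # Each persona proposes someone from its own field.
        return "Invite " + system.split("You are ")[1].split(".")[0]
    # Voting: everyone agrees only with inviting the marine biologist.
    return "AGREE" if "marine biologist" in user else "DISAGREE"
```

Keying votes by author rather than by statement text keeps two participants who happen to propose identical wording from collapsing into a single entry.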

Experiment with the ACI prototype yourself by following this link:

https://aci-demos.vercel.app/demos/basic-aci

When we posed the question “Who should serve on humanity’s advisory panel for ‘first contact’ with a whale species?” to 25 diverse AI participants, they reached near-unanimous consensus and offered the following justifications:

Dr. Lori Marino: A neuroscientist specializing in cetacean brain evolution and intelligence metrics, providing essential neuroanatomical expertise.

Dr. Luke Rendell: An expert in whale song transmission and cultural evolution, offering critical context on cetacean communication.

Dr. Denise Herzing: A pioneer in wild dolphin communication systems using underwater keyboards, bringing practical interaction experience.

Dr. Frans de Waal: A renowned primatologist and expert in animal emotions and social intelligence, contributing valuable evolutionary context.

Dr. Michel André: A specialist in bioacoustic engineering for real-time cetacean communication systems, enabling the technological mediation for such research.

For this particular deliberation, we asked the AI participants to consider how different skills and expertise would complement each other on such an advisory board, a task that requires capabilities such as functional role assessment, interdisciplinary synthesis, knowledge mapping, and relational analysis.

While this example is playful, it shows that we can design multi-agent systems to engage in structured deliberation and reach meaningful consensus, even on subjective questions where there's no single "right" answer. Thinking together may well beat thinking alone, even for artificial intelligence systems.

This shift has become noticeable lately. The industry examples from Microsoft and Anthropic show multi-agent frameworks forming at scale, and our smaller experiments reveal similar principles at work: AI systems collaborating, debating, and improving each other's reasoning.

One promising direction for the future of AI is designing collective intelligence systems that harness the power of multiple AI agents working together. We’re systematically exploring which collective intelligence mechanisms are best suited to future AI systems, and we’re designing deliberation processes that capture the best of both human and artificial intelligence.

We’re betting that these will become more important as we move toward an increasingly AI-augmented future.
